How Injection Attacks Are Evolving: Why Fraud Fighters Need to Stay a Step Ahead

Identity verification (IDV) platforms are under siege. While deepfakes and AI-generated images dominate the fraud conversation, what’s often overlooked is how these manipulated images enter the system.

Fraudsters have long used presentation attacks, in which they hold up printed photos, screen images, or even sophisticated masks to fool verification checks. Presentation attacks still occur frequently, but they are generally less sophisticated than newer methods. Increasingly, attackers are turning to more direct and technical approaches known as injection attacks, in which manipulated media is inserted directly into the verification pipeline.

One particularly troubling and growing subset is camera injection attacks, in which fraudsters intercept or hijack the live camera feed itself. Instead of genuine real-time selfies or document images, attackers inject altered or repurposed content, often taken from publicly accessible sources and subtly modified with basic editing or AI-based tools. Because camera injection attacks exploit the inherent trust IDV platforms place in camera input, they are especially difficult to detect without targeted defenses.

In developer forums, such as those discussing OpenAI’s recent document verification rollout, frustrated users openly share methods to bypass ID checks, some even explicitly recommending camera injection attacks as a workaround. These discussions underscore a harsh reality: if your IDV platform isn’t designed with injection resistance at its core, it will be exploited. Every time.

What Is a Camera Injection Attack?

A camera injection attack occurs when fraudsters intercept or manipulate the real-time data stream from a device’s camera before it reaches the IDV platform. Instead of transmitting genuine live imagery, attackers inject pre-recorded videos, static images, or subtly altered media directly into the camera input stream.

Simple Illustration of a Camera Injection Attack:

- The IDV application requests live input from the device’s webcam or smartphone camera using an API.

- A malicious application or script intercepts this camera request.

- Instead of the genuine live camera feed, the attacker injects a previously prepared image or video clip.

- The IDV platform, unaware of the manipulation, treats this injected content as authentic live data.
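To make these steps concrete, here is a toy Python model, not a real capture stack; every name in it is hypothetical. Frames are plain strings, a "frame source" is any callable that yields them, and swapping one source for another stands in for the interception and substitution steps. What it illustrates is the trust problem: the IDV code receives frames through the same interface either way, so a check that only confirms frames arrived cannot tell the two apart.

```python
from typing import Callable, Iterator, List

# Hypothetical stand-in for a camera API: any callable that yields frames.
# Frames are plain strings here so the data flow is easy to follow.
FrameSource = Callable[[], Iterator[str]]


def genuine_camera() -> Iterator[str]:
    """The intended path: each frame is freshly captured (simulated)."""
    for i in range(3):
        yield f"live-frame-{i}"


def injected_source() -> Iterator[str]:
    """The substituted path: a prepared image is replayed instead."""
    for _ in range(3):
        yield "prepared-image"


def idv_capture(source: FrameSource) -> List[str]:
    """The IDV app requests frames and receives whatever the source returns."""
    return list(source())


def naive_check(frames: List[str]) -> bool:
    """A check that only confirms frames arrived accepts both sources."""
    return len(frames) > 0


if __name__ == "__main__":
    for source in (genuine_camera, injected_source):
        frames = idv_capture(source)
        print(source.__name__, frames, naive_check(frames))
```

Detection therefore has to examine the content and context of what arrives (here, for instance, the replayed source produces identical frames), not merely whether the camera API returned data.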

These attacks are most commonly executed on rooted Android devices, jailbroken iPhones, or desktop systems where fraudsters have higher-level access, enabling them to easily override camera permissions and simulate a legitimate real-time capture.

The Shift: From Deepfakes to Smart Tweaks

Initially, fraudsters depended heavily on sophisticated deepfake images to trick biometric verification systems. Recently, however, we’ve observed a notable shift in tactics toward simpler yet equally deceptive methods:

- Repurposed real headshots: Attackers now often source existing headshots from publicly accessible places like inmate databases, corporate websites, or social media profiles.

- Subtle image alterations: Instead of creating entirely synthetic faces, attackers apply basic manipulations—such as cropping, flipping, stretching, or subtly extending the image using AI—to mimic authentic selfies.

- Collages and composite images: Attackers splice multiple real images together, blending authentic elements to form seemingly new images that can evade detection.

This shift from fully synthetic deepfakes to AI-augmented repurposed photos presents a greater detection challenge. These subtly altered images retain enough realism to bypass basic liveness checks, particularly when platforms don’t examine behavioral or contextual inconsistencies.

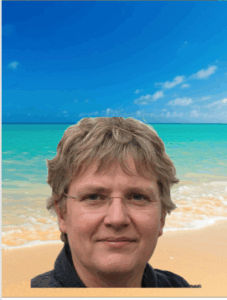

Images created by AI to illustrate the attack vector in which photos from publicly available sources are repurposed and AI is used to extend them into other environments
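Tweaks such as flipping or cropping are enough to defeat exact-match lookups, because any pixel change produces a completely different cryptographic digest. One common countermeasure is perceptual hashing. The sketch below is a generic average hash (aHash) in pure Python, not Socure's method. It assumes images are grayscale lists of rows at least 8 pixels on each side, and it handles mirroring explicitly by also hashing the flipped candidate. Because aHash shrinks the image to an 8x8 grid, it largely tolerates mild rescaling, but heavy crops or AI extensions shift content between grid cells and would need more robust techniques.

```python
import hashlib
from typing import List

Image = List[List[int]]  # grayscale pixels (0-255), row-major; assumed >= 8x8


def exact_digest(img: Image) -> str:
    """SHA-256 of the raw pixels: any edit at all yields a different digest."""
    return hashlib.sha256(bytes(min(max(p, 0), 255) for row in img for p in row)).hexdigest()


def flip_horizontal(img: Image) -> Image:
    return [row[::-1] for row in img]


def average_hash(img: Image, size: int = 8) -> int:
    """aHash: average the image into a size x size grid, then set one bit per
    cell that is brighter than the grid's mean."""
    h, w = len(img), len(img[0])
    cells = []
    for i in range(size):
        r0, r1 = i * h // size, (i + 1) * h // size
        for j in range(size):
            c0, c1 = j * w // size, (j + 1) * w // size
            block = [img[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def mirror_tolerant_distance(a: Image, b: Image) -> int:
    """Hash distance from a to b, also trying b's mirror image (catches flips)."""
    ha = average_hash(a)
    return min(hamming(ha, average_hash(b)), hamming(ha, average_hash(flip_horizontal(b))))


if __name__ == "__main__":
    original = [[x * 16 for x in range(16)] for _ in range(16)]  # left-to-right gradient
    mirrored = flip_horizontal(original)
    print(exact_digest(original) == exact_digest(mirrored))  # False
    print(mirror_tolerant_distance(original, mirrored))  # 0
```

A plain hash comparison would treat the mirrored copy as unrelated (its aHash differs in every bit here); checking both orientations is what closes that gap.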

Background Complexity as a New Tactic

Fraudsters have also started paying closer attention to image backgrounds. Blank or uniform backgrounds often raise suspicion, prompting attackers to insert more realistic settings like beaches, bookshelves, or urban backdrops to mimic authenticity. In a recent case analyzed by Socure’s fraud research team, an injected image showing a woman at the beach turned out to be a collage of two separate images—an AI-generated background layered behind a stolen headshot.

An AI-generated image illustrating the attack vector in which two images are combined to create a layered background effect.
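One generic forensic technique for spotting collages like this is noise-level inconsistency analysis: a camera sensor tends to leave a fairly uniform noise signature across a genuine photo, while a pasted or AI-generated region can carry a different one. The pure-Python sketch below is an illustrative assumption, not a description of Socure's pipeline. It computes a high-pass residual (each pixel minus the mean of its four neighbors), measures the residual's variance per tile, and flags the image when the noisiest tile is far noisier than the quietest. The tile size of 16 and the ratio threshold of 4.0 are hypothetical values chosen for the example.

```python
import random
from statistics import pvariance
from typing import List

Image = List[List[float]]  # grayscale pixel values, row-major


def residual(img: Image, y: int, x: int) -> float:
    """High-pass residual: the pixel minus the mean of its 4 neighbors."""
    neighbors = img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
    return img[y][x] - neighbors / 4.0


def tile_noise_levels(img: Image, tile: int = 16) -> List[float]:
    """Variance of the residual inside each tile (interior pixels only)."""
    h, w = len(img), len(img[0])
    levels = []
    for ty in range(0, h, tile):
        for tx in range(0, w, tile):
            values = [
                residual(img, y, x)
                for y in range(max(ty, 1), min(ty + tile, h - 1))
                for x in range(max(tx, 1), min(tx + tile, w - 1))
            ]
            if len(values) >= 2:
                levels.append(pvariance(values))
    return levels


def looks_composited(img: Image, ratio_threshold: float = 4.0, eps: float = 1e-6) -> bool:
    """Flag when local noise levels differ by more than ratio_threshold."""
    levels = tile_noise_levels(img)
    return max(levels) / (min(levels) + eps) > ratio_threshold


if __name__ == "__main__":
    rng = random.Random(7)
    n = 64
    # Genuine-like: the same noise level everywhere.
    uniform = [[128 + rng.gauss(0, 10) for _ in range(n)] for _ in range(n)]
    # Collage-like: a noisy left half beside a perfectly smooth right half.
    collage = [[128 + rng.gauss(0, 10) if x < n // 2 else 128.0 for x in range(n)]
               for _ in range(n)]
    print(looks_composited(uniform), looks_composited(collage))
```

Real photos contain edges, textures, and compression artifacts that also shift local noise, so a bare ratio like this would produce false positives on its own; it would need calibration and would serve as one signal among many.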

AI Extensions Instead of Entirely Synthetic Deepfakes

There’s a growing trend of fraudsters using AI tools not to create entirely fake faces, but rather to subtly manipulate real ones. For instance:

- A cropped, genuine headshot is extended or reshaped by AI to resemble a selfie.

- Real facial features are slightly altered—changing angles or expressions—to evade traditional deepfake detection.

Images created by AI to illustrate the attack vector in which a synthetic deepfake is extended to look like a matching selfie

Images created by AI to illustrate the attack vector in which a synthetic deepfake is manipulated with different expressions or environments

These tactics indicate that identity verification platforms must expand their scope beyond basic facial similarity. When most of the image is authentic and only specific elements have been modified, traditional detection tools often fail.

How to Detect the New Breed of Injection Attacks

At Socure, we’ve adopted an identity-centric approach rather than focusing narrowly on document or image verification. This means placing the user’s overall identity at the heart of our verification strategy and analyzing multiple dimensions simultaneously, not just images.

We perform rigorous forensic examinations of documents and selfies, checking for signs of manipulation, presentation attacks, deepfakes, biometric mismatches, age discrepancies, repeated faces, and dozens of additional forensic indicators. But our analysis doesn’t stop at images. We incorporate numerous identity signals, including device analytics, device-user associations, device velocity, phone number risk, email and address risk, behavioral anomalies, contextual discrepancies within submitted personal information, intelligence from known fraud attempts, and signals derived from our graph network spanning over 2,000 customers. The list continues to grow.

This comprehensive defense-in-depth strategy ensures a smooth, frictionless verification experience for legitimate users, while simultaneously creating multiple challenging barriers for fraudsters.
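The intuition behind layering can be made concrete with a little arithmetic. If each layer misses a given attack with some probability and the layers fail independently, the chance of slipping past all of them is the product of those miss rates. Independence is a strong simplifying assumption: real detectors often share blind spots, so actual gains are smaller, and the rates below are made-up illustrations rather than measured figures.

```python
from math import prod
from typing import Sequence


def bypass_probability(miss_rates: Sequence[float]) -> float:
    """Chance an attack evades every layer, assuming layers fail independently."""
    for rate in miss_rates:
        if not 0.0 <= rate <= 1.0:
            raise ValueError(f"miss rate must be in [0, 1], got {rate}")
    return prod(miss_rates)


if __name__ == "__main__":
    # Hypothetical: three layers that each miss 20% of attempts.
    print(round(bypass_probability([0.2, 0.2, 0.2]), 6))  # 0.008
    # Even if one layer is fully bypassed (miss rate 1.0), the others still apply.
    print(round(bypass_probability([1.0, 0.2, 0.2]), 6))  # 0.04
```

Under these assumed numbers, defeating one layer outright still leaves the attacker facing a 96% chance of being caught by the remaining two.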

Some of the layers in this approach include:

- Behavioral Tracking: Identifying anomalies in user interactions and camera alignment timing, and looking for signs of emulators or other software standing in for live capture.

- Static Image & Loop Detection: Spotting unnatural repetition, static expressions, or backgrounds indicative of injection.

- Contextual & Metadata Analysis: Assessing lighting, shadow consistency, backgrounds, and frame-by-frame facial landmark transitions.

- Re-used Image Flagging: Flagging images that are reused across different identities, as well as cases where the same image is submitted as both the headshot and the selfie.

These represent just a fraction of the extensive measures we leverage to combat injection attacks, ensuring that even sophisticated attempts rarely succeed.
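As an illustration of the static image and loop detection layer, the sketch below flags two simple replay patterns in a sequence of grayscale frames: frames that never change, and a frame that reappears after the sequence has moved on, as happens when a short clip is looped. It is a hypothetical example, not Socure's implementation; the mean-absolute-difference measure and the 0.5 threshold on a 0 to 255 scale are assumptions. The intuition is that live sensor noise and small movements make near-identical frames rare, whereas a replayed image or clip produces them readily.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]  # grayscale pixels (0-255), row-major; same size for all frames


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel absolute difference between two frames."""
    total = sum(abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return total / (len(a) * len(a[0]))


def is_static(frames: List[Frame], threshold: float = 0.5) -> bool:
    """True if no consecutive pair of frames differs by more than `threshold`."""
    return all(mean_abs_diff(f0, f1) <= threshold for f0, f1 in zip(frames, frames[1:]))


def find_loop(frames: List[Frame], threshold: float = 0.5,
              min_gap: int = 2) -> Optional[Tuple[int, int]]:
    """Return the first (i, j) where frame j nearly duplicates frame i at least
    `min_gap` frames earlier, or None. Pairwise search: O(n^2) comparisons."""
    for j in range(len(frames)):
        for i in range(j - min_gap + 1):
            if mean_abs_diff(frames[i], frames[j]) <= threshold:
                return (i, j)
    return None


def classify_capture(frames: List[Frame]) -> str:
    """Captures with fewer than two frames count as static."""
    if is_static(frames):
        return "static"
    if find_loop(frames) is not None:
        return "loop"
    return "no repetition found"


def drifting_frame(k: int) -> Frame:
    """Synthetic 8x8 frame whose pattern shifts with k, standing in for live video."""
    return [[float((x * 3 + y * 5 + k * 7) % 64) for x in range(8)] for y in range(8)]


if __name__ == "__main__":
    live = [drifting_frame(k) for k in range(8)]
    print(classify_capture(live))                     # no repetition found
    print(classify_capture([drifting_frame(0)] * 6))  # static
    print(classify_capture(live[:3] * 3))             # loop
```

The quadratic loop search is acceptable for a short capture; re-encoded or jittered replays would also call for a tolerance tuned on real data rather than a fixed threshold.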

Even if attackers bypass one or two detection layers, numerous additional defenses await them, significantly reducing their likelihood of success.
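The re-used image flagging layer can be pictured as an index of perceptual hashes checked on every submission. The sketch below is hypothetical: it assumes 64-bit perceptual hashes have already been computed by some method, and that "headshot" means the portrait on the submitted ID document. The `ReuseIndex` class, the Hamming-distance cutoff of 6, the flag wording, and the hash values in the usage example are all illustrative. It raises a flag when a submission's selfie is nearly identical to its own headshot, since a genuine selfie should be a separate capture, and when either image closely matches one stored under a different identity.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two 64-bit perceptual hashes."""
    return bin(a ^ b).count("1")


@dataclass
class ReuseIndex:
    """Hypothetical in-memory store of (perceptual_hash, identity_id) pairs."""
    max_distance: int = 6  # illustrative cutoff for "same image"
    entries: List[Tuple[int, str]] = field(default_factory=list)

    def check_submission(self, identity_id: str, headshot_hash: int,
                         selfie_hash: int) -> List[str]:
        flags = []
        # A genuine selfie is a new capture, so it should not be a near-copy
        # of the headshot.
        if hamming(headshot_hash, selfie_hash) <= self.max_distance:
            flags.append("selfie matches headshot image")
        # Either image closely matching one stored under another identity.
        for stored_hash, stored_id in self.entries:
            if stored_id == identity_id:
                continue
            for label, h in (("headshot", headshot_hash), ("selfie", selfie_hash)):
                if hamming(h, stored_hash) <= self.max_distance:
                    flags.append(f"{label} previously seen under identity {stored_id}")
        self.entries += [(headshot_hash, identity_id), (selfie_hash, identity_id)]
        return list(dict.fromkeys(flags))  # drop duplicate flags, keep order


if __name__ == "__main__":
    index = ReuseIndex()
    print(index.check_submission("alice", 0xF0F0F0F0F0F0F0F0, 0x0F0F0F0F0F0F0F0F))  # []
    # ['headshot previously seen under identity alice']
    print(index.check_submission("bob", 0xF0F0F0F0F0F0F0F1, 0x123456789ABCDEF0))
    # ['selfie matches headshot image']
    print(index.check_submission("carol", 0xAAAAAAAAAAAAAAAA, 0xAAAAAAAAAAAAAAAB))
```

The linear scan keeps the example short; at scale, a structure designed for Hamming-distance search, such as a BK-tree, would replace it.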

Conclusion: Don’t Rely on Deepfake Detection Alone

Injection attacks are rapidly evolving. Fraudsters increasingly favor subtle manipulations of real images rather than entirely synthetic creations. With millions of high-quality photos available online, it’s simpler—and often more effective—for attackers to repurpose and slightly alter genuine faces rather than build convincing fakes from scratch.

If your verification platform isn’t prepared to detect anomalies across multiple dimensions—behavioral, contextual, temporal, and forensic—it remains vulnerable. Staying ahead of modern identity fraud demands much more than standard liveness checks; it requires understanding attacker strategies deeply and anticipating their next move.

Injection attacks are the new frontline. Make sure your platform is ready.

Deepanker Saxena